Why is our technology vital to the world?

AI and LLMs Limited by Memory Bandwidth

The demand for processing power in High Performance Computing (HPC), Artificial Intelligence (AI) and Machine Learning (ML) is increasing at an unprecedented rate. Moore’s law is helping processors keep pace with these demands; however, there is an ever-widening gap between the memory bandwidth available in these systems and the requirements of Large Language Models (LLMs). In fact, over the last six years the parameter count of LLMs grew more than 100,000 times faster than GPU HBM memory bandwidth.

While electrical interconnects work well over short distances of a few millimeters, they face fundamental limits in reach, size and power efficiency, especially at higher data rates and longer distances. There is therefore a need for low-power, compact, fast link technologies at a reasonable price point to overcome these challenges.

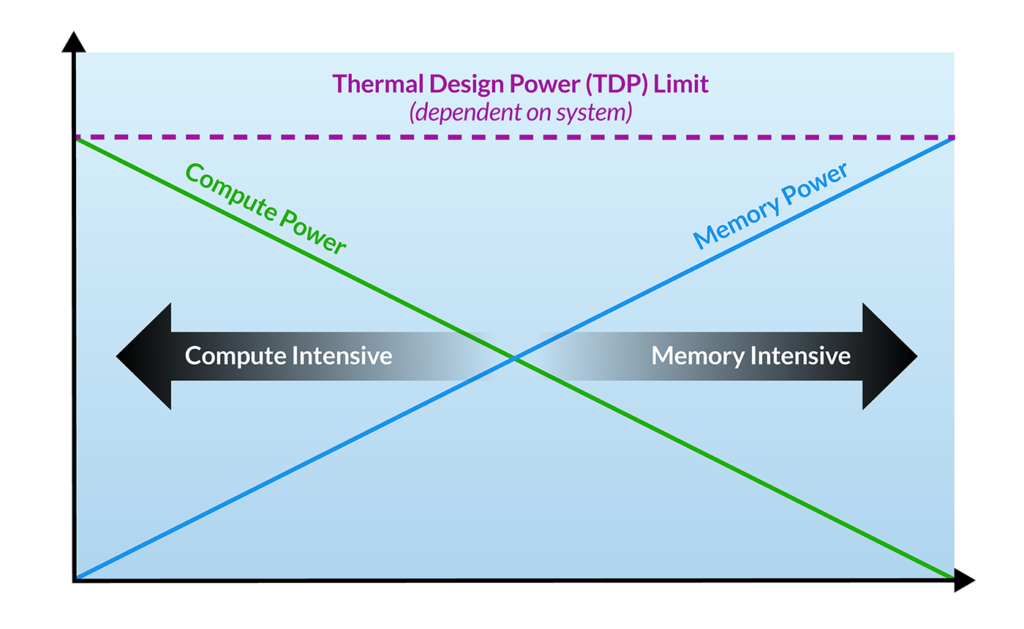

Memory Capacity and Bandwidth Limited by Thermal Design Power

Every design or application has a Thermal Design Power (TDP) limit associated with its package. The TDP can be split between compute power and memory power, but their sum cannot exceed the TDP.

Some applications require more memory power and others more compute power, but in every case the TDP cannot be exceeded.

With AI, ML and HPC, the requirement for more memory capacity (and bandwidth) creates a conflict: using more memory reduces the power available for compute, which compromises performance.

One way to overcome this is to increase the effective TDP by moving the memory outside the package. This is only beneficial if the interconnect interface co-located on the compute package consumes less power than the HBM it replaces, and if the resulting performance gain outweighs the added cost.
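The power side of this trade-off can be sketched with a few lines of arithmetic. This is a simplified model under stated assumptions: the HBM's power leaves the compute package entirely, the co-located interconnect interface still draws from the compute package's TDP, and the cost/performance condition is not modeled. The function names and all wattages are hypothetical, chosen only to illustrate the calculation.

```python
def compute_headroom_w(tdp_w: float, on_package_memory_w: float) -> float:
    """Power left for compute under a fixed package TDP.

    Encodes the constraint from the text: compute + memory <= TDP.
    """
    if on_package_memory_w > tdp_w:
        raise ValueError("memory power alone exceeds the package TDP")
    return tdp_w - on_package_memory_w


def offload_is_beneficial(hbm_w: float, link_interface_w: float) -> bool:
    """Power-side condition for moving memory off-package.

    Assumption: the HBM power leaves the compute package, while the co-located
    interconnect interface (link_interface_w) still draws from its TDP.
    The cost/performance condition from the text is not modeled here.
    """
    return link_interface_w < hbm_w


# Hypothetical numbers, chosen only to illustrate the arithmetic.
TDP_W = 700.0
HBM_W = 150.0
LINK_INTERFACE_W = 30.0

before = compute_headroom_w(TDP_W, HBM_W)            # HBM in package
after = compute_headroom_w(TDP_W, LINK_INTERFACE_W)  # only the link interface remains
print(f"compute headroom: {before:.0f} W -> {after:.0f} W, "
      f"beneficial: {offload_is_beneficial(HBM_W, LINK_INTERFACE_W)}")
```

With these illustrative inputs the compute headroom rises from 550 W to 670 W. The off-boarded memory then sits in a package with its own power budget, which is how moving it out raises the effective TDP for both compute and memory.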

This can be achieved using Avicena’s LightBundle™ optical interconnects. Moving the memory onto a separate package increases the effective TDP available for compute and memory, enabling more compute power and more memory power, and therefore higher memory bandwidth and capacity.

How do we increase bandwidth and capacity?

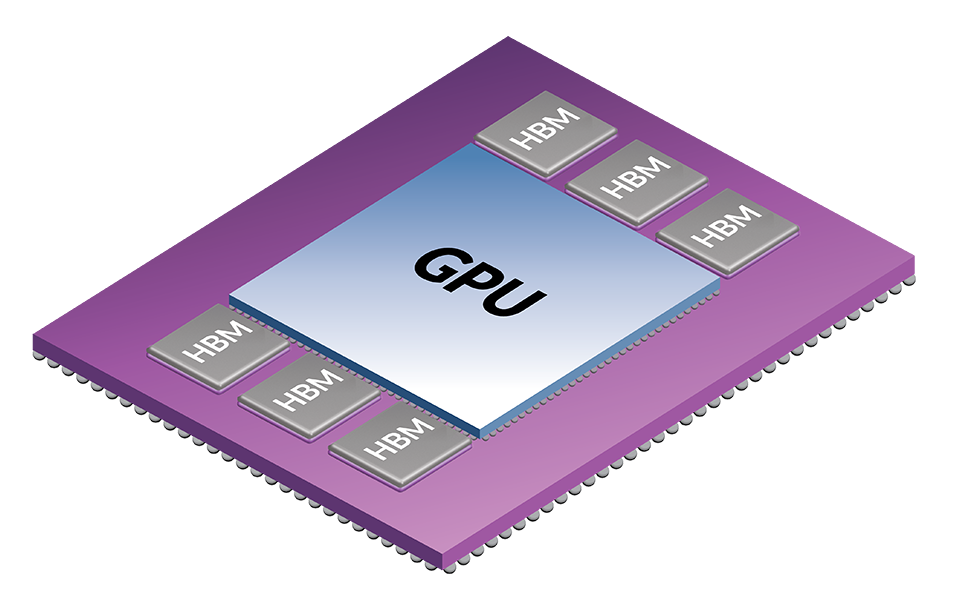

High-performance GPU solutions implement High Bandwidth Memory (HBM) in the package instead of DDR-based memory. The HBM architecture allows a significant increase in data transfer rates compared to traditional memory architectures.

This additional bandwidth is crucial for applications such as high-end graphics processing, artificial intelligence and scientific computing. However, this architecture still has limitations. The interconnects between the HBM and the GPU are copper, and the HBM stacks are co-located on a silicon interposer of finite size, so bandwidth is limited by the available beachfront (the length of chip edge available for I/O).

The GPUs also operate at elevated temperatures, which is far from ideal for the HBM.

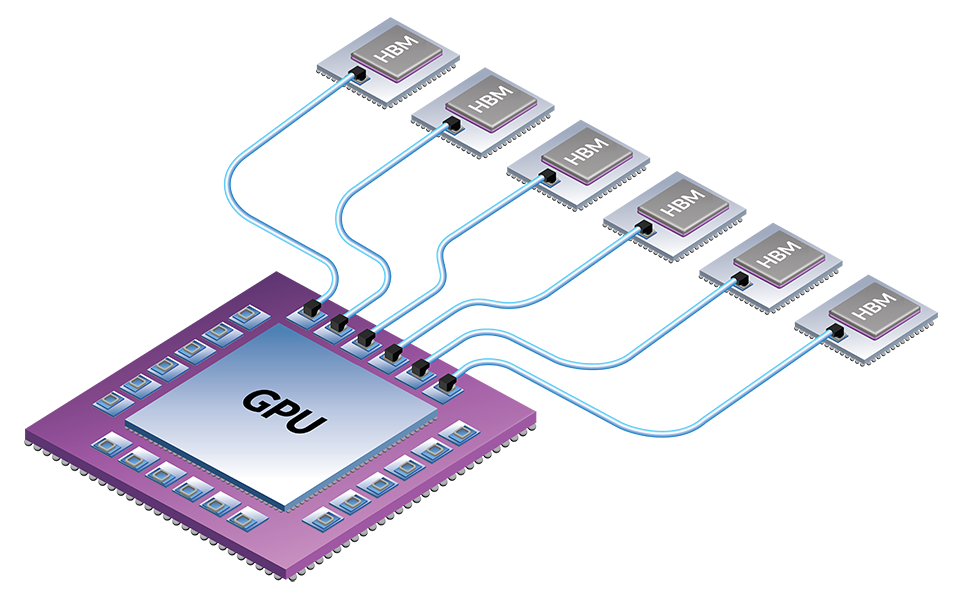

Avicena’s µLED interconnects enable remote HBM

Avicena’s µLED interconnects enable remote HBM, thereby eliminating the space constraints of the beachfront and moving the HBM stacks away from hot GPUs.

Avicena’s LightBundle solution comprises a LightBundle IC with a LightBundle coupler that is 3D stacked onto an advanced CMOS IC. This allows increased data throughput and density at very low energy.

The solution is compatible with numerous IC processes and foundries, and can be placed on any IC and at any location on that IC.

How Does Our Technology Work?

LightBundle™ — Using microLEDs to “Move Data”

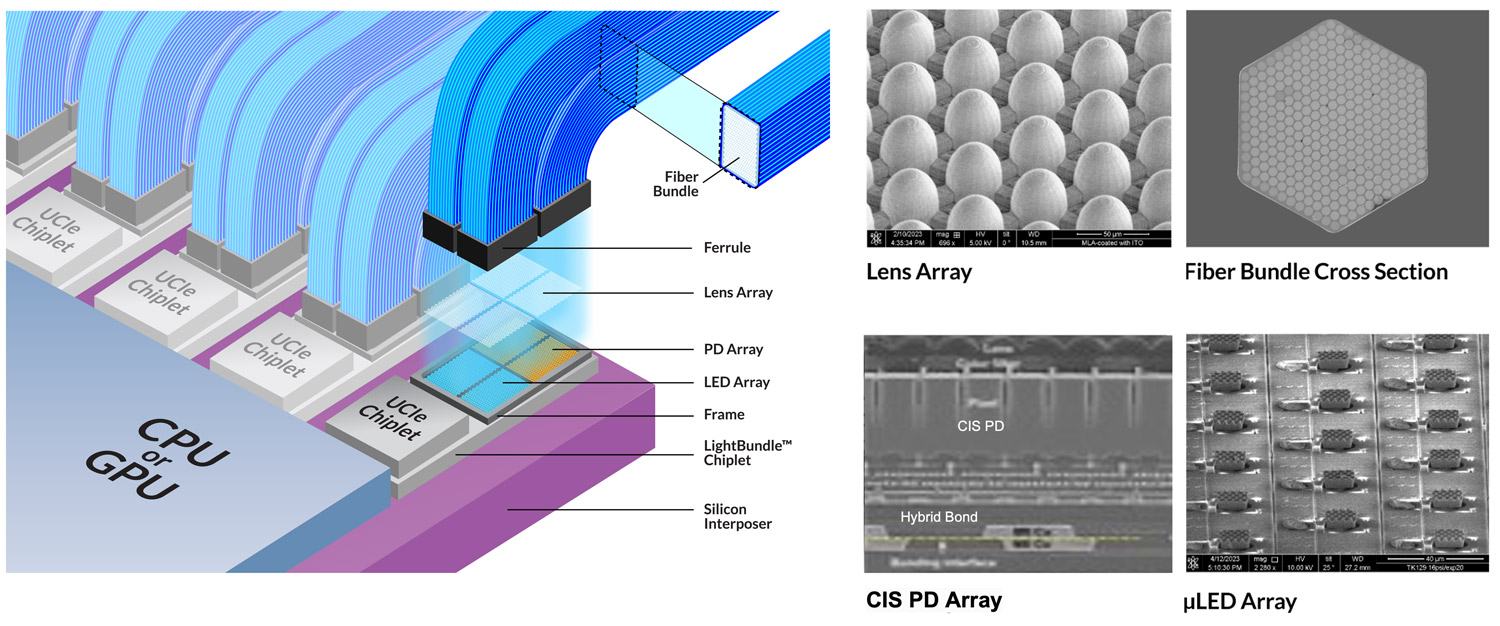

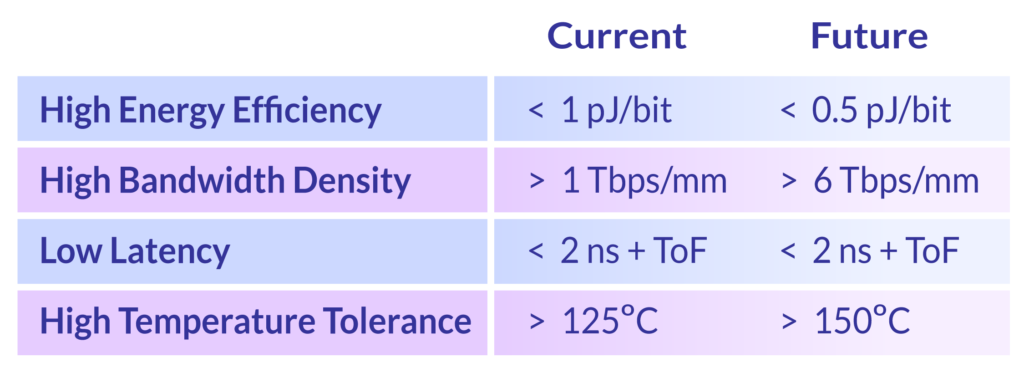

Avicena’s LightBundle™ interconnects use arrays of microLEDs connected via multi-core fiber bundles to silicon (Si) detectors on CMOS ICs. This enables ultra-low-power links of <1 pJ/bit with a reach of up to 10 m.
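To put the energy-per-bit figure in perspective, link power is simply energy per bit multiplied by data rate. The sketch below is a unit conversion only; the function name and the 1 Tbps example rate are hypothetical, and 1 pJ/bit is used as the upper bound quoted above.

```python
def link_power_w(energy_pj_per_bit: float, data_rate_gbps: float) -> float:
    """Link power in watts: P = E_bit * R.

    1 pJ/bit * 1 Gbps = 1e-12 J * 1e9 bit/s = 1e-3 W, hence the division by 1000.
    """
    return energy_pj_per_bit * data_rate_gbps / 1000.0


# Upper bound at the quoted 1 pJ/bit for a hypothetical 1 Tbps (1000 Gbps) link.
print(link_power_w(1.0, 1000.0))  # 1.0
```

In other words, every terabit per second carried at 1 pJ/bit costs at most 1 W, and the power scales linearly with both energy per bit and aggregate bandwidth.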

The parallel nature of the LightBundle interconnect is well matched to the wide internal bus architecture of ICs such as CPUs, GPUs and switch ASICs, eliminating the need for a power-intensive SerDes interface.


How do we achieve world leading bandwidth density?

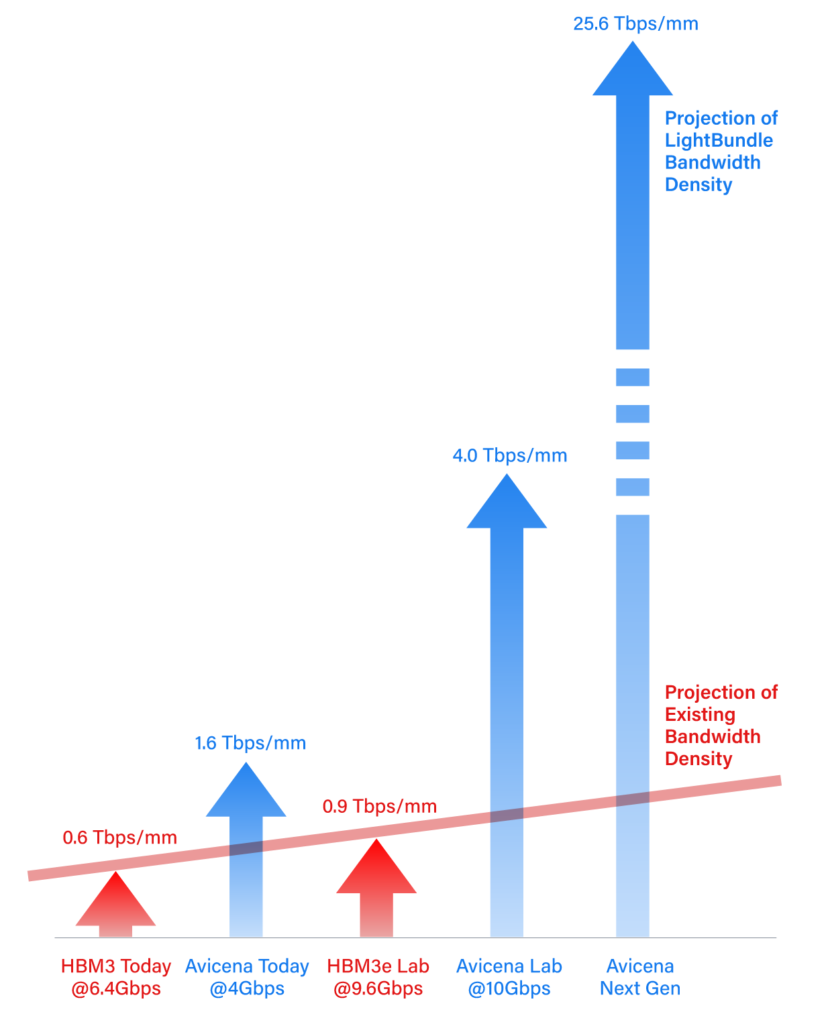

2D µLED Array Enables Area Bandwidth Density of >25 Tbps/mm²

By using 2D µLED arrays, the I/O can cover the entire IC area rather than only its edge, increasing the bandwidth density.

Avicena’s next-generation products will enable bandwidth densities in excess of 25 Tbps/mm².

To achieve this world-leading bandwidth density, Avicena is taking a three-step approach:

1st Step: Available today is a 4 Gbps/lane solution with 50 µm lane spacing, providing 1.6 Tbps/mm²

2nd Step: Under evaluation in the lab is a 10 Gbps/lane solution with 50 µm lane spacing, providing 4.0 Tbps/mm²

3rd Step: Under development for the near future are next-generation products with 16 Gbps/lane and 25 µm lane spacing, providing 25.6 Tbps/mm²
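The three figures follow directly from lane rate and lane spacing if the lanes are assumed to sit on a square 2D grid with the quoted spacing as the pitch in both directions (an assumption; the exact array geometry is not stated). The sketch below reproduces them and, for contrast, computes the density of a single row of lanes at the same pitch along a chip edge, which is the beachfront-limited case. Function names are hypothetical.

```python
def area_density_tbps_per_mm2(lane_rate_gbps: float, pitch_um: float) -> float:
    """Bandwidth per unit area for a 2D lane array.

    Assumption: lanes sit on a square grid with the lane spacing as the pitch in
    both directions, so 1 mm x 1 mm holds (1000 / pitch_um) ** 2 lanes.
    """
    lanes_per_mm2 = (1000.0 / pitch_um) ** 2
    return lane_rate_gbps * lanes_per_mm2 / 1000.0  # Gbps -> Tbps


def edge_density_tbps_per_mm(lane_rate_gbps: float, pitch_um: float) -> float:
    """Bandwidth per mm of chip edge for a single row of lanes at the same pitch."""
    lanes_per_mm = 1000.0 / pitch_um
    return lane_rate_gbps * lanes_per_mm / 1000.0


# (step, Gbps per lane, lane spacing in um) as listed in the three steps.
steps = [("1st", 4.0, 50.0), ("2nd", 10.0, 50.0), ("3rd", 16.0, 25.0)]
for name, rate, pitch in steps:
    print(f"{name}: {area_density_tbps_per_mm2(rate, pitch):.1f} Tbps/mm^2 "
          f"(single edge row: {edge_density_tbps_per_mm(rate, pitch):.2f} Tbps/mm)")
```

Halving the pitch quadruples the number of lanes per mm² but only doubles the lanes per mm of edge, which is why covering the whole IC area with a 2D array scales bandwidth density so much faster than edge-only I/O.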


The LightBundle is matched to the parallel architecture of the GPU (a large number of lanes transmitting at relatively low speeds), allowing a 1-to-1 interface that eliminates the need for a power-intensive SerDes interface.
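The difference between a 1-to-1 parallel mapping and a SerDes-based link can be illustrated by counting lanes. This sketch only counts lanes and multiplexing ratios; it does not model power, and the bus width, per-wire rate and serial lane rate are hypothetical values chosen for round numbers.

```python
import math


def one_to_one_lanes(bus_width_bits: int) -> int:
    """Parallel mapping as described: one lane per bus wire, no serialization."""
    return bus_width_bits


def serdes_lanes(bus_width_bits: int, bus_rate_gbps: float,
                 serial_rate_gbps: float) -> tuple[int, int]:
    """Return (serial lanes, mux ratio) when the bus is serialized onto faster lanes."""
    if serial_rate_gbps < bus_rate_gbps:
        raise ValueError("serial lanes must be at least as fast as the bus wires")
    mux_ratio = int(serial_rate_gbps // bus_rate_gbps)  # bus wires folded into each lane
    return math.ceil(bus_width_bits / mux_ratio), mux_ratio


# Hypothetical 512-bit bus at 4 Gbps per wire (2,048 Gbps aggregate).
print(one_to_one_lanes(512))          # 512
print(serdes_lanes(512, 4.0, 128.0))  # (16, 32)
```

Both options carry the same aggregate bandwidth, but the serialized version needs a 32:1 SerDes on every one of its 16 lanes, whereas the 1-to-1 mapping passes each bus wire straight through at its native rate.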

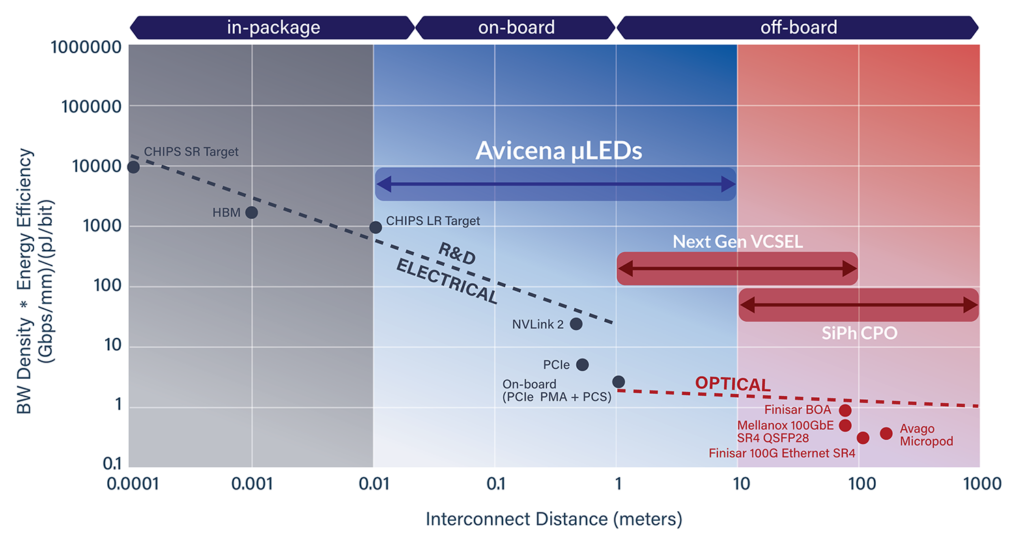

Avicena LightBundle™ Power and Density are >10x Better than Existing Solutions

The performance of an interconnect can be expressed as a figure of merit (FoM) that combines bandwidth density at the chip edge (Gbps/mm) with energy efficiency (pJ/bit). This FoM depends on reach.
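The text does not give the exact FoM formula. A minimal sketch, assuming the common convention of dividing edge bandwidth density by energy per bit (so higher density and lower energy both raise the FoM), and comparing links only among those that meet a required reach, could look like this. The class, functions and all entries are hypothetical and are not measured data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interconnect:
    name: str
    edge_density_gbps_per_mm: float  # bandwidth density at the chip edge
    energy_pj_per_bit: float         # energy efficiency (lower is better)
    reach_m: float                   # the text notes the FoM depends on reach

    def fom(self) -> float:
        """Assumed FoM: (Gbps/mm) / (pJ/bit); higher is better."""
        return self.edge_density_gbps_per_mm / self.energy_pj_per_bit


def best_at_reach(links: list[Interconnect], min_reach_m: float) -> Interconnect:
    """Highest-FoM link among those that meet a required reach."""
    candidates = [link for link in links if link.reach_m >= min_reach_m]
    if not candidates:
        raise ValueError("no interconnect meets the required reach")
    return max(candidates, key=lambda link: link.fom())


# Hypothetical entries for illustration only; not measured data.
links = [
    Interconnect("short-reach link", 1000.0, 0.5, 0.005),
    Interconnect("longer-reach link", 500.0, 1.0, 10.0),
]
print(best_at_reach(links, 0.001).name)  # short-reach link
print(best_at_reach(links, 1.0).name)    # longer-reach link
```

Filtering by reach before ranking reflects the point that FoM values are only comparable at similar reach: a link with a lower FoM can still be the best choice once the required distance rules out the alternatives.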

In the chart above, LightBundle-based interconnects match the performance of electrical links while increasing the reach from a few mm up to about 10 m, allowing much more efficient disaggregation of HPC and AI architectures.

Avicena Technology Highlights

Leveraging microLEDs, Avicena has pioneered a transformative interconnect technology designed for super-high bandwidth and low-power optical connections.

The parallel nature of the LightBundle interconnect, with relatively low data rates per lane, is well matched to the wide parallel architecture of the internal buses in processor ICs and switch ASICs. This approach eliminates the need for the complex, power-hungry SerDes typically found in conventional optical links, where the goal is always to minimize the number of costly and power-hungry laser modules.

The innovative LightBundle solution is applicable to a wide range of applications and integrates seamlessly into any CMOS IC, making the world’s communication and compute more efficient.