
The History of Artificial Intelligence

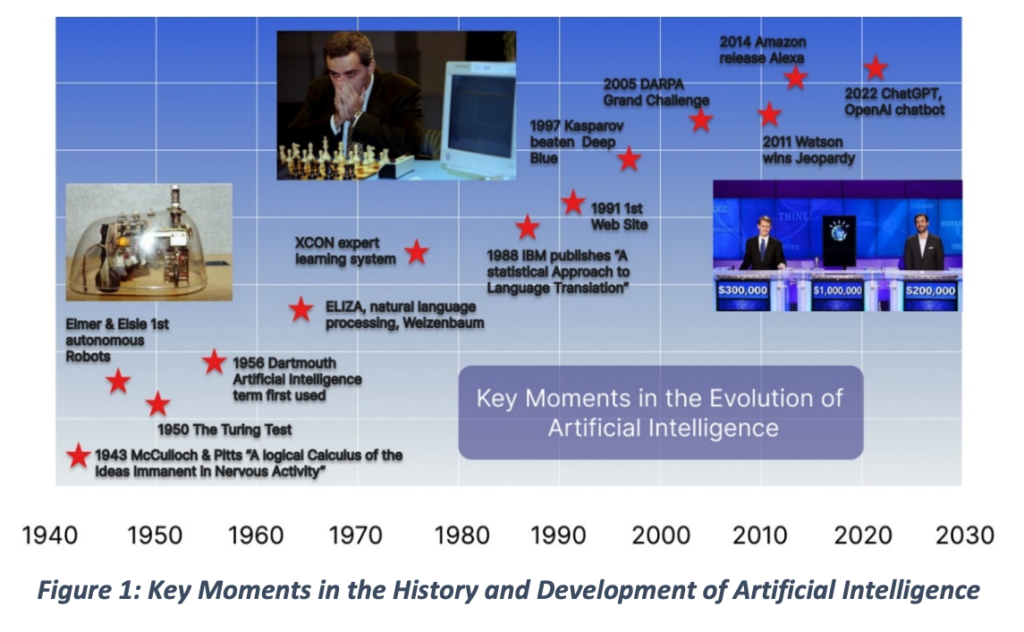

In recent years, Artificial Intelligence (AI) has taken centre stage in the tech industry and become a ubiquitous term. Despite its recent surge in popularity, the concept has existed since 1955, when John McCarthy coined the term in his proposal for a workshop on artificial intelligence at Dartmouth College, which took place the following summer. However, the true viability of AI has only become apparent in the last few years, fueled by advances in technologies such as GPUs, the internet, and Large Language Models (LLMs).

AI is no longer confined to theoretical discussions but has become an integral part of our daily lives. From supermarkets and suppliers predicting consumer behaviour to the more personal task of removing an ex-boyfriend or ex-girlfriend from a holiday photograph, AI is steadily being integrated into almost every aspect of our routines. Whether we embrace it or not, AI adoption is accelerating rapidly. Even where it operates discreetly, AI influences our experiences: it targets web advertisements on social media, predicts behaviour on gambling websites, enables facial recognition in security systems, and provides support and advice through chatbots. This pervasiveness underscores AI's transformative impact on modern life.

Factors Driving the AI Explosion

While AI research and development has a long history, the past few years have witnessed an unprecedented surge in both the advancement and the adoption of AI. This article outlines the pivotal factors that fueled this growth throughout the 2000s.

It is the convergence of the factors described below that created a perfect storm for the rapid growth and adoption of AI technologies in the 2000s and set the stage for the continued expansion of AI applications in subsequent years.

Increase in Computing Power:

The 2000s saw a substantial increase in computing power, driven by improvements in hardware, especially the development of more powerful and efficient processors. This increase in computational capabilities allowed researchers to train larger and more complex AI models.

Availability of Large Datasets:

The growth of the internet and digital technologies led to the generation and accumulation of massive datasets. In AI, especially in machine learning and deep learning, having access to vast amounts of data is crucial for training models. The availability of large datasets facilitated the development of more accurate and sophisticated AI algorithms.

What is Natural Language Processing and Why is it Important?

Natural Language Processing (NLP) is a subfield of AI that deals with interactions between computers and human languages. NLP algorithms help computers understand, interpret, and generate natural language.

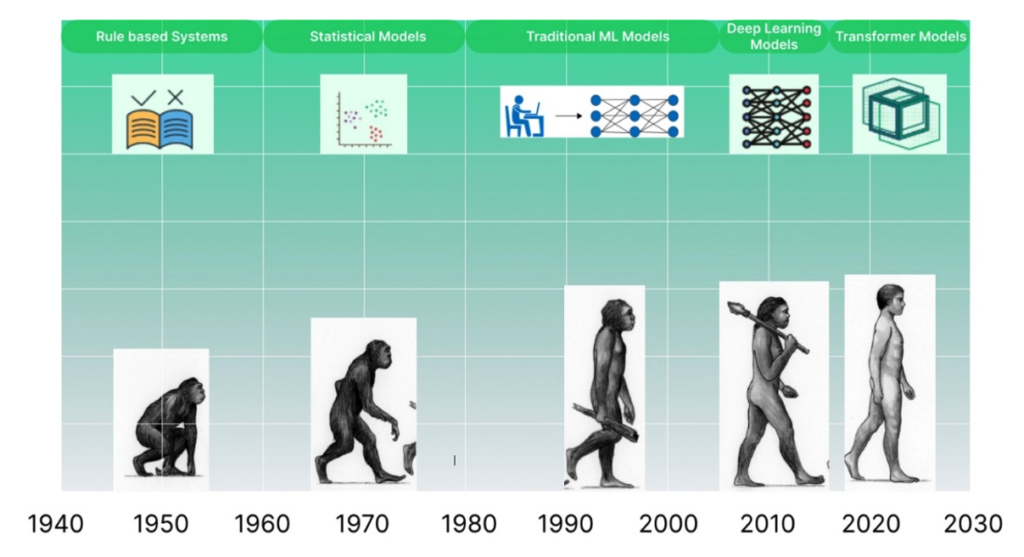

NLP's inception dates back to the 1950s. The early models were rule-based systems that relied on manually crafted rules to process and translate language, and their primary application was translation. Notably, in 1954, IBM achieved a milestone in a joint demonstration with Georgetown University by using a computer to translate 60 Russian sentences into English through a set of meticulously designed rules.
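To make the idea of manually crafted rules concrete, here is a minimal sketch of a rule-based translator. It is an illustration only and not a reconstruction of the 1954 system: the four-word transliterated lexicon, the part-of-speech tags, and the single article-insertion rule are all assumptions chosen for this example.

```python
# Hypothetical toy lexicon: transliterated Russian word -> (English word, part of speech).
LEXICON = {
    "ya": ("I", "PRON"),
    "chitayu": ("read", "VERB"),
    "novuyu": ("new", "ADJ"),
    "knigu": ("book", "NOUN"),
}


def translate(sentence):
    """Translate word by word, then apply one hand-written grammar rule."""
    tokens = sentence.lower().split()
    # Words missing from the lexicon are passed through in angle brackets.
    tagged = [LEXICON.get(token, (f"<{token}>", "UNK")) for token in tokens]

    output = []
    for i, (word, pos) in enumerate(tagged):
        previous_pos = tagged[i - 1][1] if i > 0 else None
        # Hand-written rule: Russian has no articles, so insert "a" when an
        # adjective or noun directly follows a verb.
        if pos in ("ADJ", "NOUN") and previous_pos == "VERB":
            output.append("a")
        output.append(word)
    return " ".join(output)


print(translate("ya chitayu novuyu knigu"))  # prints "I read a new book"
```

Every new word or grammatical construction needs another lexicon entry or another rule, which is why systems of this kind were laborious to extend.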

Moving into the 1970s and 1980s, a paradigm shift occurred with the rising prominence of statistical models and machine learning algorithms. During this period, one noteworthy model was the Hidden Markov Model (HMM), which found early success in speech recognition applications. This transition marked a pivotal moment in the evolution of NLP, paving the way for more dynamic and data-driven approaches in language processing.
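As a rough illustration of how an HMM is used, the sketch below decodes the most likely sequence of hidden states behind a series of observations with the Viterbi algorithm, the standard decoding method for HMMs. The two states, the three observation labels, and every probability are made-up values for this example and are not taken from any real speech recognizer.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state path and its probability."""
    # best[t][s] = (probability of the best path ending in state s at step t,
    #               the state that path came from)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p)
                for p in states
            )
        best.append(column)

    # Start from the most probable final state and follow the back-pointers.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path, best[-1][last][0]


# Hypothetical model: hidden sound classes emit coarse loudness labels.
STATES = ("vowel", "consonant")
START_P = {"vowel": 0.6, "consonant": 0.4}
TRANS_P = {
    "vowel": {"vowel": 0.7, "consonant": 0.3},
    "consonant": {"vowel": 0.4, "consonant": 0.6},
}
EMIT_P = {
    "vowel": {"loud": 0.5, "medium": 0.4, "quiet": 0.1},
    "consonant": {"loud": 0.1, "medium": 0.3, "quiet": 0.6},
}

path, probability = viterbi(["loud", "medium", "quiet"], STATES, START_P, TRANS_P, EMIT_P)
print(path)  # prints ['vowel', 'vowel', 'consonant']
```

In contrast to a rule-based system, the behaviour here is determined by the probability tables, which in practice are estimated from data rather than written by hand.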

AI Applications

AI is deployed across diverse industries with the primary goal of enhancing efficiency and reducing the workload of human workers.

The transformative impact of AI is evident in its widespread application across various sectors. Below are examples of how artificial intelligence can be harnessed and tailored to meet the unique demands and challenges of particular industries:

Education:

AI programs are increasingly utilized for tasks such as grading students' assignments and providing a tailored learning experience. Platforms like Carnegie Learning leverage AI to furnish students with personalized feedback on their work, along with customized testing and learning modules.

Healthcare:

AI plays a crucial role in monitoring, diagnosing, and treating patients. Platforms such as IBM Watson Health empower medical professionals by allowing them to pose queries in natural language and receive swift responses.